In Pursuit of Perfection Salad, Part Two

Where We Left Off

In Part One, I attempted to identify recipes in my test corpus (the first 100 Iowa community cookbooks digitized out of an eventual 200) resembling the popular domestic-science-era concoction “Perfection Salad” using several common text analysis tools. By using both Voyant and AntConc I was able to find many examples, but each tool had limitations that prevented a thorough search. I had begun preparing a Python Jupyter Notebook that could search a wider context window for instances of “cabbage” co-occurring with “gelatin,” “gelatine,” or “jello.” Now I’ll walk through the steps I have taken using Python to create a list of Perfection-salad-like recipes as well as explore a few anomalies in my corpus which this process helped to uncover.

Setting up the Jupyter Notebook

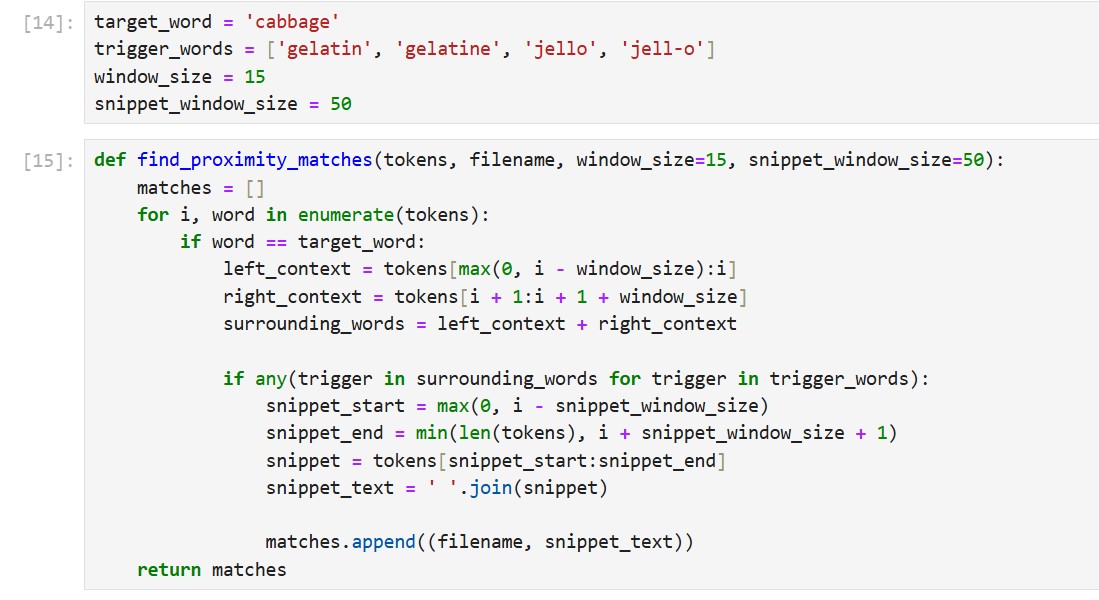

I have used Python and the Natural Language Toolkit to search for words and create a concordance before, but I have not ever used it to identify collocates, so I used ChatGPT to help me think through the steps necessary in Python and refine my code. The code excerpt below shows variables defined for the target word “cabbage” as well as the gelatin collocates or “trigger words” and the context window size of 15. While reviewing the results of my first test with this code I realized that the 15-word context window was not enough text for me to tell whether the recipe was truly a match, so I decided to print larger 50-word snippets for each result to make reviewing easier. The image below also shows my function “find_proximity_matches” which applies my defined variables, finds matches, and returns the filenames and text snippets.

The code goes on to cycle through each cookbook txt file in my corpus folder, opening each, tokenizing each using NLTK’s word_tokenize, and creating a list of matches. The first version of this notebook simply displayed the filenames and snippets on-screen which was great for making sure the function was working but not practical for reviewing all results. So, I added code to output all results to a csv.

One additional step I am considering is to merge the title, date, and organization from my metadata spreadsheet into the csv export of results so that I can easily see more information about the cookbooks without having to go to the image files. This extra information wasn’t necessary for this analysis since I needed to review the images anyway, but for a more polished workshop-ready Jupyter notebook I think this would be really helpful.

Examining and Cleaning the Python Output

Python identified 75 matches based on the search perimeters outlined above and generated a csv with the file name and 50-word snippet for each result. I was then able to review each snippet to see whether the generated results matched my definition of a Perfection-Salad-like recipe.

Some of the returned results were easily discarded because they were duplicates that occurred because one result was generated for each instance of cabbage occurring near the gelatin terms, so if cabbage appeared more than once in the same recipe and near enough to the other words, that recipe was returned twice in the search results. I was also able to discard results that were simply recipe names from a cookbook’s table of contents rather than the full recipe.

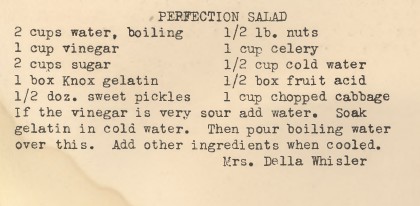

Other incorrect results required a little more investigation. One example appears in the 1940 Ladies Aid Society of the Christian Church cookbook from Delta, Iowa. The Python results identified one recipe from this book called “Vegetable Salad,” but when I looked for that recipe in the digitized cookbook I noticed another recipe on the same page called “Perfection Salad” that Python did not catch, and I was very curious why.

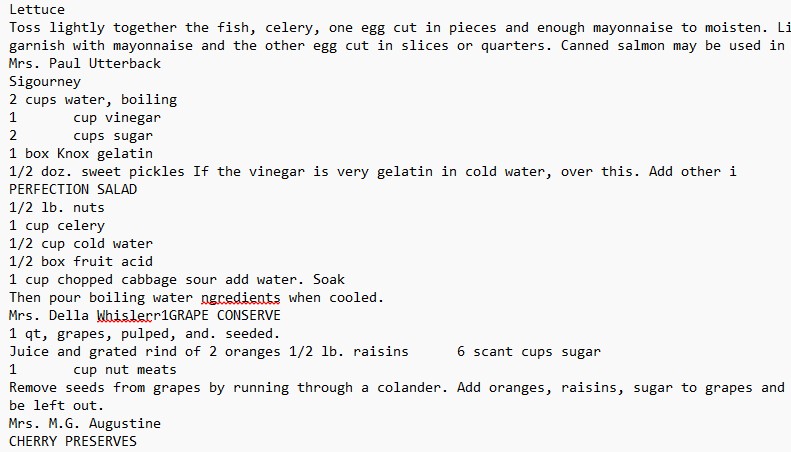

I programmed Python to search within 15 words on either side of the word “cabbage” for occurrences of “gelatin,” “gelatine,” or “jello.” The Perfection Salad recipe in the digitized image has the word “gelatin” occurring 12 words from the word “cabbage” on the left and 10 words from “cabbage” on the right. To determine why this recipe was not recognized, I took a look at the OCR txt file produced by ABBYY FineReader and noticed that the recipe for Perfection Salad is a mess in the OCR. Several recipes are garbled together and some words are missing entirely which has effected the number of words between “gelatin” and “cabbage” causing Python to miss this recipe. I have been using ABBYY FineReader OCR files for all analysis so far, but for the sake of testing and comparison, I have also been producing OCR files using Tesseract OCR. The image on the right below is the Tesseract version of the same Perfection Salad recipe. Much better. Throughout this project there has not been a consistant “winner” as far as OCR quality between ABBYY and Tesseract. Each do some things better than the other, but in this case the difference in OCR output quality was enough to effect my analysis - something I’ll need to consider how to manage as I continue with this project.

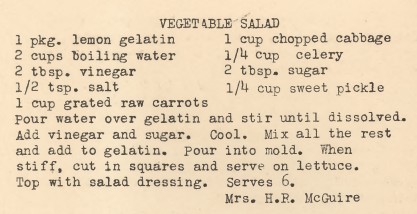

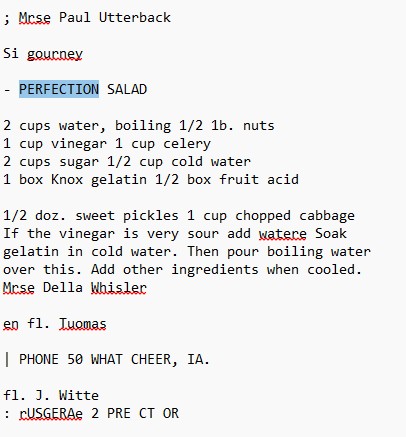

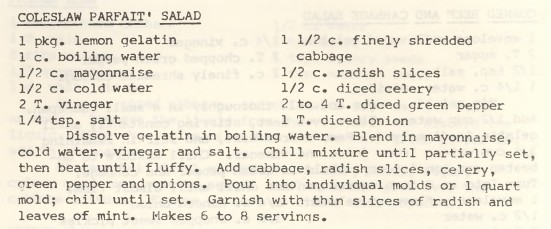

Below is one final example of a recipe that Python missed even though it fits my ingredient criteria for Perfection Salad:

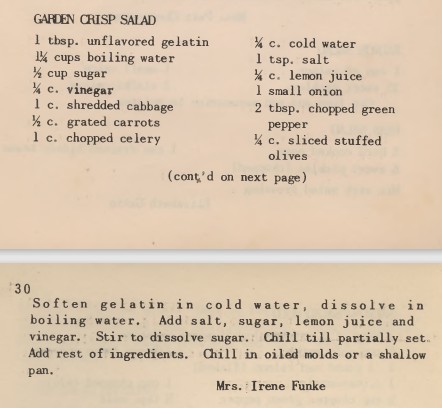

In this case, there are multiple occurrences of the word “gelatin” but all of them are outside of our context window of 15 words left or right of “cabbage.” Widening the context window would help catch examples like this; however, it would also identify many more recipes that I would have to discard because they do not qualify. For example, widening the context window could identify recipes that contain jello or gelatin that are next to other recipes that contain cabbage. Earlier I mentioned I used NLTK’s word_tokenize which splits a long text string into a list of tokens or “words.” Word_tokenize counts punctuation and numbers as “words.” So, an alternative to changing the context window would be to remove all numbers and punctuation from the text, lessening the number of “words” between ingredients in many cases and therefore bringing more occurrences within the current context window.

Final Results

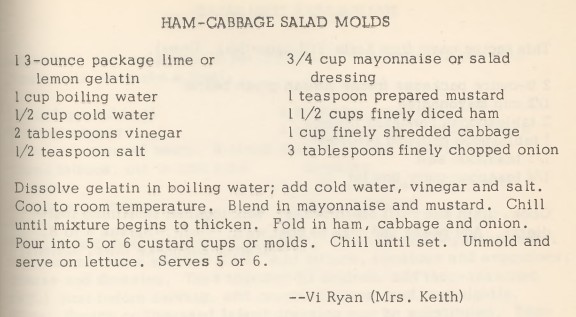

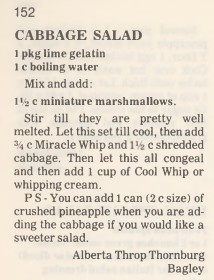

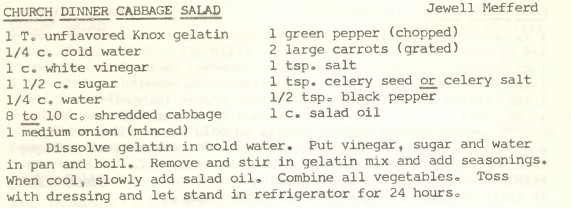

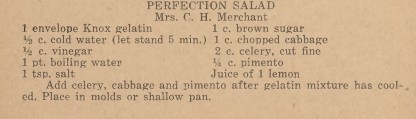

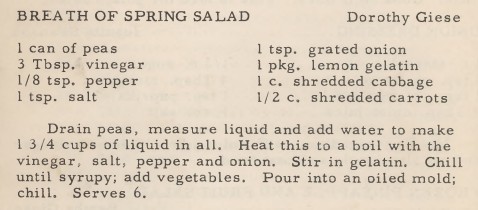

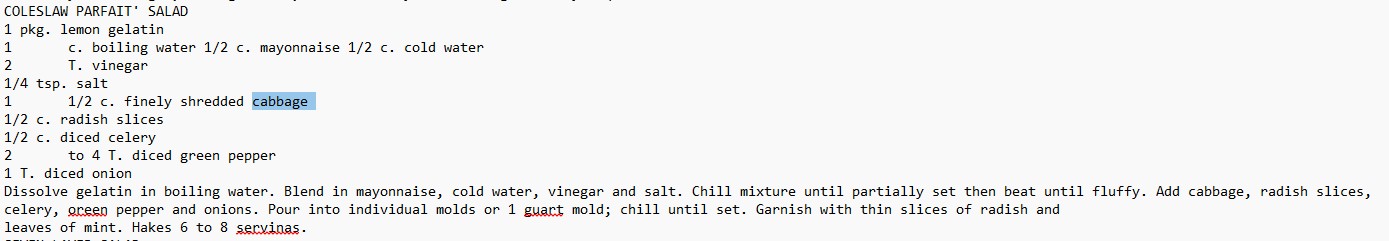

After reviewing and cleaning the results, I was able to identify 50 individual recipes resembling Perfection Salad in my test corpus of 100 cookbooks. Some are more true to the original molded salad, and some are more similar to the variation described in Part One where gelatin is used as a thickener for the slaw dressing. You’ll also notice some have titles that may have made them identifiable at a glance while others have more ambiguous titles that would have made identifying by human visual search much more difficult. Below are a few examples of recipes identified by the Python notebook: